Source:- 1reddrop.com

Hybrid cloud adoption is on a roll, and we think the root cause of the mass migration is none other than hybrid’s more sociable sibling, public cloud. Report after report points to a huge shift towards hybrid cloud adoption.

At 1redDrop, we hold a strong belief that very few companies will be operating their own datacenters ten years from now. At this point, though, hybrid cloud makes the most sense because, believe it or not, the world of cloud computing has a huge pending To-Do list that is preventing a massive chunk of the business world from moving to public cloud.

Here is what we’ve identified as the top 10 reasons behind the new wave of migration towards hybrid cloud, with a description of each below the list:

- Vendor Lock-in, Switching Costs and Complexity

- Fear of Outages

- Migration Challenges

- Public Cloud Cost Component

- Existing Infrastructure and Investments in IT

- Lack of Talent

- Multi-cloud is Easy to Talk About but Hard to Implement

- Compliance and Regulatory Concerns

- Security Angst

- Hybrid Offers the Biggest Cloud Benefits with the Fewest Headaches

Vendor Lock-in, Switching Costs and Complexity

Aside from security, this is, by far, the biggest fear that companies have when it comes to public cloud. The bigger the company, the bigger the fear. Here are some of the questions IT professionals ask themselves when considering a shift to cloud:

- What if I develop everything for the platform and then the cloud service provider starts increasing costs?

- What if a competing cloud provider becomes ten times better than the service provider I have chosen?

- Will I be able to quickly move all my data and applications to another vendor for any reason?

Big companies operate in petabytes. Migration has to be carefully planned, and implementation is never easy. In fact, it might take weeks, if not months. And let’s not forget the cost of switching from one provider to another.

This is a problem that the industry as a whole needs to address if it wants widespread adoption, and that means a lot of responsibility lies on the shoulders of Amazon and Microsoft, who are leading the race.

Unfortunately, Microsoft and Amazon seem to be okay with the status quo, and it’s understandable because they are the two alpha dogs in the cloud race.

But for Google, the underdog, there is a clear problem with the current solution, and the company is seizing that opportunity. Google has been aggressive about making it easy to handle multi-cloud, allowing clients to work with multiple cloud vendors as a matter of practice.

Fear of Outages

This is a real problem, but putting the onus for it on cloud service providers alone is not going to resolve anything. If there’s one thing that’s guaranteed in technology, it is that things fail all the time. There is no guarantee that your own datacenter will never go down, or that one of your employees won’t break something with a simple typo. Just because you handle all your infrastructure does not mean that you have fool-proofed yourself against outages.

The challenge for public cloud vendors is that their clients tend to have somewhat unrealistic expectations around outages, more so because there’s a sacred “service level agreement” in place. But even a 99.9 percent uptime commitment, if honored, still allows 8.76 hours of downtime over a full calendar year, which is more than a third of one whole day. For certain types of applications, that can be disastrous, to say the least.
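The 8.76-hour figure is simple arithmetic, and it is worth running for other uptime levels too. Here is a minimal Python sketch, assuming a non-leap year of 8,760 hours; the function name and the extra SLA levels are ours, chosen for illustration, and are not taken from any provider’s actual agreement.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, assuming a non-leap year


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the downtime an uptime commitment still permits over the period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (100 - uptime_percent) / 100


# Illustrative SLA levels; only 99.9 comes from the article.
for sla in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(sla)
    print(f"{sla}% uptime still allows {hours:.2f} hours of downtime per year")
```

Each extra “nine” cuts the permitted downtime by a factor of ten, which is why the exact number in the agreement matters so much for consumer-facing applications.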

The recent Amazon outage was so massive that it seemed to take a large part of the Internet down with it. It lasted only about four hours before things got back to a normal state, and just look at the havoc it wreaked.

If you’re running a consumer-facing application, you just cannot afford to have such outages. Though it can happen to anyone, the fear that a third-party infrastructure service can go down does keep companies away from embracing public cloud. And things like the recent Amazon outage – not to mention the widespread publicity that came along with it – only increase that fear.

Migration Challenges

Migration remains a big hurdle for companies that are considering public cloud. Once again, the larger your database, the larger the problem is. If you have a live application with millions of customers, it will be a daunting task to migrate from your existing infrastructure to public cloud. You will have to plan every single detail, organise your resources and then hope that the actual execution part goes your way.

If you have been in IT for any amount of time, you know that there is always scope for things to go wrong. In fact, we can say with 100% certainty that no matter how much you plot and plan, something invariably goes wrong at some point during the operation. And CTOs who know this will always be wary of taking on avoidable trouble.

On the positive side, however, it is most definitely possible. Evernote, the cross-platform workplace app, moved to Google Cloud Platform recently. It took the company 70 days to move 3 PB of data into Google Cloud. Evernote said that it started off small, by transporting a few items at a time, and then accelerated once it was 100% confident. It’s not impossible, but it is a difficult task to achieve, no doubt.
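To get a feel for what moving 3 PB in 70 days demands, here is a rough back-of-the-envelope sketch in Python. It assumes decimal petabytes (10^15 bytes) and a transfer that runs continuously at a constant rate for the full 70 days. Both are our assumptions: Evernote started small and then accelerated, so its peak rate would have been higher than this average.

```python
PETABYTE = 10**15          # decimal petabyte, an assumption for this estimate
SECONDS_PER_DAY = 24 * 60 * 60


def average_transfer_rate(total_bytes: float, days: float) -> float:
    """Average bytes per second needed to move total_bytes within the given days."""
    if days <= 0:
        raise ValueError("days must be positive")
    return total_bytes / (days * SECONDS_PER_DAY)


rate = average_transfer_rate(3 * PETABYTE, 70)
print(f"Average rate: {rate / 10**6:.0f} MB/s "
      f"({rate * 8 / 10**9:.2f} Gbit/s sustained, around the clock)")
```

Even as an average, that is close to 500 MB every second for ten weeks, which helps explain why migrations at this scale need careful planning.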

Public Cloud Cost Component

Amazon, Microsoft and Google Cloud are slashing cloud service prices on a regular basis. During a recent analysis, we found that Amazon (AWS) – between October 2008 and November 2016 – cut its S3 pricing from $0.15/GB to $0.026/GB, an 82.67% cut. More recently, Microsoft slashed its L Series VM costs by up to 69%, and Google Cloud Platform’s stated philosophy is: “Affordable on-demand prices and a commitment to Moore’s Law.”
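The S3 figure is easy to verify. The Python sketch below recomputes the percentage cut and also derives a rough annualized rate of decline. The helper names are ours, and the annualized number assumes a steady compound decline over the 97 months between October 2008 and November 2016, which is a simplification, since real price cuts arrive in steps.

```python
def percent_cut(old_price: float, new_price: float) -> float:
    """Total price reduction as a percentage of the old price."""
    return (old_price - new_price) / old_price * 100


def annualized_decline(old_price: float, new_price: float, months: int) -> float:
    """Equivalent constant yearly decline (percent), assuming smooth compounding."""
    years = months / 12
    return (1 - (new_price / old_price) ** (1 / years)) * 100


S3_PRICE_2008 = 0.15    # $/GB, October 2008 (from the article)
S3_PRICE_2016 = 0.026   # $/GB, November 2016 (from the article)
MONTHS_ELAPSED = 97     # October 2008 to November 2016

print(f"Total cut: {percent_cut(S3_PRICE_2008, S3_PRICE_2016):.2f}%")
print(f"Annualized decline: "
      f"{annualized_decline(S3_PRICE_2008, S3_PRICE_2016, MONTHS_ELAPSED):.1f}% per year")
```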

Though all the cloud service providers are aggressively cutting costs, they have a great distance to cover before getting cloud prices to a level where it makes no economic sense to continue with the on-premise model.

See what Google Cloud Platform says on its webpage:

“Talking to our customers, we have heard several clear messages. First, Cloud pricing is too high to be a long-term replacement for the premise-based model. Prices need to come down — not just initially but over time.” – GCP

Bank of America CTO David Reilly told Brandon Butler, Senior Editor of Network World:

“When we benchmark the price points in the public cloud against what we’re able to provide internally – and we have years of benchmarking under our belt now – the economic delta’s just not there yet,” he says. “There’s no economic reason for us to move to the public cloud.”

The point at which even hybrid cloud doesn’t make economic sense is still a long way off, which is why larger companies prefer their own private cloud or take the hybrid route.

Existing Infrastructure and Investments in IT

Though we don’t hear much about this as a hurdle to public cloud, it’s a very real one – and one that heavily influences the decision-making process. According to recent data from Gartner, worldwide expenditure on traditional Data Center Operations (DCO) and Infrastructure Utility Services (IUS) reached $154 billion in 2016 within the total data center services market.

Cloud IT infrastructure sales as a share of overall worldwide IT spending climbed to 37.2% in 4Q16, up from 33.4% a year ago, according to IDC.

As you can see, although Cloud IT spending has been growing, it has not yet grown to even half of worldwide IT infrastructure spending. The bulk of spending is still going towards traditional infrastructure.

With so much money still going towards building their own infrastructure, it will be extremely difficult for companies to make a complete move to public cloud because it essentially makes their existing investments redundant. And that’s not something that’ll go down well with stakeholders, unless public cloud pricing reaches that tipping point where being on the cloud starts to pay for itself and then some.

Lack of Talent

Finding a good IT resource is difficult, and if you are looking for talent to work on new technologies, things are going to be even more difficult. Cloud is growing, but it is not easy for companies to find talent that can address their requirements after a move to public cloud.

“According to the Hays Global Skills Index, last year marked the fifth consecutive year of a rising UK skills shortage, particularly in the technical engineering and specialist technology roles into which cloud skills fall,” Cindy Rose, Microsoft UK Chief Executive, told the Microsoft Tech Summit in Birmingham.

Multi-cloud is Easy to Talk About but Hard to Implement

The recent Amazon outage took down the Internet with it because so many big applications were hosted in the AWS US-EAST-1 region that was affected. But it also made it glaringly obvious that some of the big companies had no backup plan, and no ability to quickly reroute traffic when a region failed.

Problems such as vendor lock-in and outages can, in principle, be addressed with a multi-cloud approach, where your application is hosted on at least two platforms (for example, Amazon Web Services and Microsoft Azure), but it’s easier said than done.
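The core idea of rerouting traffic when one platform fails can be sketched in a few lines of Python. Everything named here is hypothetical: the `Endpoint` type, the example URLs, and the simulated health check are ours for illustration. A real setup would rely on DNS failover or a global load balancer with proper health probes, and it would also have to deal with data replication, which this sketch ignores entirely.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Endpoint:
    provider: str  # hypothetical label, e.g. "aws" or "azure"
    region: str
    url: str


def pick_endpoint(endpoints: Iterable[Endpoint],
                  is_healthy: Callable[[Endpoint], bool]) -> Optional[Endpoint]:
    """Return the first healthy endpoint in priority order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Priority-ordered deployments of the same application (example.com URLs are placeholders).
ENDPOINTS = [
    Endpoint("aws", "us-east-1", "https://app-aws.example.com"),
    Endpoint("azure", "eastus", "https://app-azure.example.com"),
]


def simulated_health_check(endpoint: Endpoint) -> bool:
    """Stand-in for a real probe: pretend the us-east-1 region is down."""
    return endpoint.region != "us-east-1"


chosen = pick_endpoint(ENDPOINTS, simulated_health_check)
print(chosen.url if chosen else "no healthy endpoint available")
```

The selection logic is the easy part. Keeping two deployments in sync, tested, and staffed is where the real cost of multi-cloud sits.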

Even finding the right talent to manage your cloud operations is difficult, but finding talent that can develop for and manage multiple platforms and frameworks at the same time is extremely challenging.

Multi-cloud needs a ground-up approach, and companies need to do a lot of work to implement it and then keep working on it to stay the course. In turn, this will actually increase the company’s overall public cloud expenditure.

Compliance and Regulatory Concerns

For a lot of industry segments such as banking and financial services, data sovereignty is an important issue. These companies will always want to stay in control of their data, and need to know exactly where the data is stored. As privacy laws around the world get stronger and tighter, a lot of countries are mandating that companies keep customer data within their borders.

Big banks have, so far, sat on the cloud sidelines because of this issue, but at the rate at which big cloud service providers are building datacenters around the world, the data sovereignty issue is on its way to vanishing from the problems list for cloud.

Compliance and regulatory issues will remain, and so will security, and the onus will be on cloud service providers to address those concerns and enable these industry segments to embrace the cloud.

Security Angst

This is one headache that will never go away. Security is always an issue whether you are on the cloud or not. Data theft and breaches are common occurrences in today’s world, and will remain so even 100 years from now. Amazon, Microsoft, Google and IBM constantly work on improving security features that they can offer for their cloud services, but will it be enough? Never, we say.

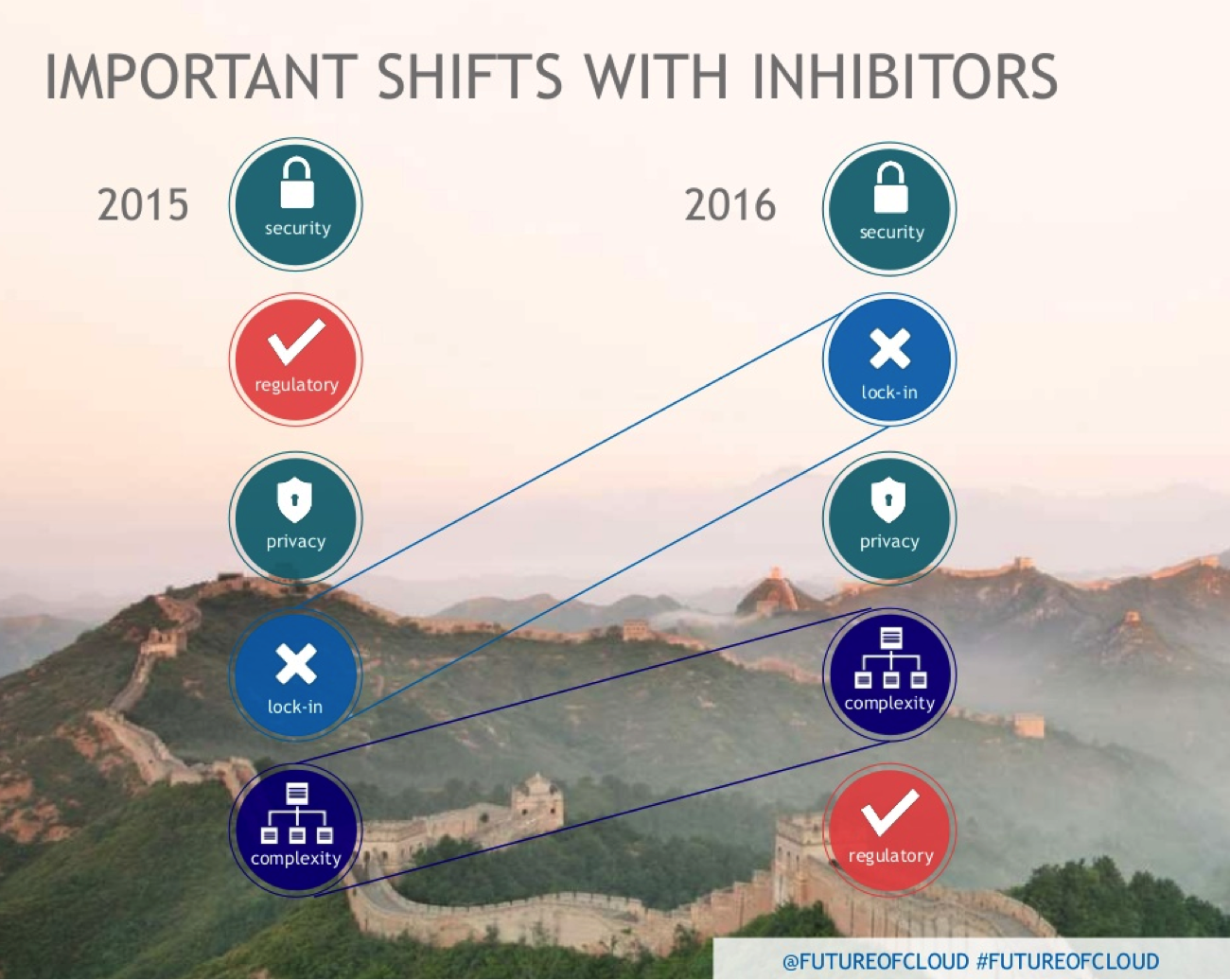

According to the Future of Cloud Computing survey by Wikibon and Northbridge, security has remained the top inhibitor over the last two years, and it will continue to be a major challenge to cloud adoption.

Hybrid Offers the Biggest Cloud Benefits with the Fewest Headaches

Possibly the biggest case for hybrid cloud adoption is made by the fact that moving to this model of cloud deployment addresses the majority of the nine challenges we just described. The hybrid cloud approach allows companies to avoid – or, at the very least, reduce to a great extent – nearly all those factors impeding cloud adoption.

Gartner says that by 2020, 90% of organisations will have hybrid management capabilities, and we just gave you ten reasons why we think that Gartner’s prediction has a good chance of proving prophetic.
