Source: https://containerjournal.com/

As I explained in my prior blog, Containers Practices Gap Assessment, containerizing software is valuable for DevOps.

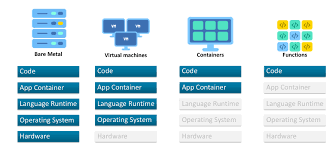

Yet, as I work with clients on their DevOps journeys, I continue to encounter organizations that haven’t grasped the importance of using containers relative to bare metal, virtual machines (VMs) and serverless computing solutions. This article is intended to help organizations understand the differences and the advantages that containers offer for DevOps.

The workhorse of IT is the computer server on which software application stacks run. A server consists of an operating system plus compute, memory, storage and network access capabilities, and is often referred to as a computer machine or just a “machine.”

A bare metal machine is a dedicated server using dedicated hardware. Data centers have many bare metal servers that are racked and stacked in clusters, all interconnected through switches and routers. Human and automated users of a data center access the machines through access servers, high security firewalls and load balancers.

The virtual machine introduced a virtualization layer, the hypervisor, between the bare metal server’s hardware (or host operating system) and the application, so one bare metal server can support more than one application stack, each with its own guest operating system, and those operating systems can differ.

This provides a layer of abstraction that allows the servers in a data center to be software-configured and repurposed on demand. In this way, a workload running on virtual machines can be scaled horizontally, by configuring multiple parallel VMs, or vertically, by allocating more CPU, memory or storage to a VM.
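To make the two scaling directions concrete, here is a minimal Python model. `VmPool`, `scale_horizontally`, `scale_vertically` and all the numbers are hypothetical illustrations; in practice, scaling is done through a hypervisor or cloud provider’s tooling, not code like this.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VmPool:
    """Hypothetical pool of identical VMs running one application stack."""
    instances: int      # number of parallel VMs
    vcpus_per_vm: int   # CPU allocated to each VM
    mem_gb_per_vm: int  # memory allocated to each VM

    @property
    def total_vcpus(self) -> int:
        return self.instances * self.vcpus_per_vm


def scale_horizontally(pool: VmPool, extra_instances: int) -> VmPool:
    """Add more parallel VMs; each VM keeps its current size."""
    return replace(pool, instances=pool.instances + extra_instances)


def scale_vertically(pool: VmPool, factor: int) -> VmPool:
    """Give each existing VM more CPU and memory; the VM count is unchanged."""
    return replace(
        pool,
        vcpus_per_vm=pool.vcpus_per_vm * factor,
        mem_gb_per_vm=pool.mem_gb_per_vm * factor,
    )


base = VmPool(instances=2, vcpus_per_vm=2, mem_gb_per_vm=4)
wide = scale_horizontally(base, extra_instances=2)  # 4 VMs x 2 vCPUs
tall = scale_vertically(base, factor=2)             # 2 VMs x 4 vCPUs
print(wide.total_vcpus, tall.total_vcpus)           # 8 8
```

Both pools end up with the same total capacity but a different shape: horizontal scaling adds redundant instances, while vertical scaling is ultimately limited by the size of the physical host.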

One of the problems with virtual machines is that this layer is quite “thick”: each VM runs a complete guest operating system, so loading and configuring a VM typically takes minutes. In a DevOps environment, where changes occur frequently, this load and configuration time matters, because any failure during loading or configuration delays the instantiation of the VM and the application even further.

The idempotent behavior of modern configuration management tools such as Puppet, Chef, Ansible and SaltStack does help to reduce the chance of errors, but when configuration errors are detected, reloading and reconfiguring the stacks takes time. These delays add up at each DevOps stage, and the accumulated time across the series of stages in a DevOps pipeline can become a significant bottleneck.
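Idempotency means that applying the same configuration twice leaves the system in the same state as applying it once. The sketch below shows the idea in Python. It is similar in spirit to Ansible’s `lineinfile` module, but it is not how any of these tools is implemented; `ensure_line` and `app.conf` are hypothetical names.

```python
import os
import tempfile


def ensure_line(path: str, line: str) -> bool:
    """Ensure `line` is present in the file at `path`.

    Idempotent: the first call may change the file, and repeated calls with
    the same arguments leave it untouched. Returns True only if a change was made.
    """
    content = ""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            content = f.read()
    if line in content.splitlines():
        return False  # desired state already reached; do nothing
    # Start on a fresh line if the file does not end with a newline.
    prefix = "\n" if content and not content.endswith("\n") else ""
    with open(path, "a", encoding="utf-8") as f:
        f.write(prefix + line + "\n")
    return True


config = os.path.join(tempfile.mkdtemp(), "app.conf")
print(ensure_line(config, "max_connections=100"))  # True  (file changed)
print(ensure_line(config, "max_connections=100"))  # False (already present)
```

Because the function checks the current state before acting, rerunning it after a partial failure is safe, which is what reduces the chance of configuration errors.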

VMs are used in production IT environments because of their flexibility to support different configurations. However, the time to reload and reconfigure VMs can be a bottleneck during release reconfigurations and can lengthen the mean time to repair (MTTR) when failures occur.

Containers, such as those run by Docker, have a much lighter-weight layer: instead of a full guest operating system, each container shares the host’s kernel and packages only the libraries and files its application stack needs. Container runtimes such as Docker isolate the application stacks running on the same host from one another, using Linux kernel features such as namespaces and control groups (cgroups).

Compared to VMs, the smaller “footprint” of containerized application stacks allows more application stacks to run on one bare metal machine or virtual machine, and containers can be instantiated in seconds rather than minutes. These characteristics make it easy to justify migrating an application stack from bare metal machines or VMs to containers. Instead of the time-consuming work of loading and configuring an application stack and OS layer for each machine, you start a container from a complete, prebuilt image and supply a small number of configuration variables, all in seconds. This ability to create and release containerized applications quickly allows the infrastructure to be immutable: rather than modifying a running container in place, you replace it with a new one started from an updated image.
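As a minimal sketch of “a complete image plus a few configuration variables,” the Python function below builds a `docker run` command line. The `--detach`, `--name` and `--env` flags are standard `docker run` options; the function name, image reference and variable values are hypothetical. The sketch only builds the command and does not execute it.

```python
import shlex
from typing import Dict, List, Optional


def docker_run_command(image: str, env: Dict[str, str],
                       name: Optional[str] = None) -> List[str]:
    """Build a `docker run` command that starts a container from a prebuilt image."""
    cmd = ["docker", "run", "--detach"]
    if name:
        cmd += ["--name", name]
    # Configuration is injected as environment variables; the image is unchanged.
    for key, value in sorted(env.items()):
        cmd += ["--env", f"{key}={value}"]
    cmd.append(image)
    return cmd


# Hypothetical release: a new version ships as a new image tag, not a patch.
release = docker_run_command(
    "registry.example.com/shop-api:1.4.2",
    {"LOG_LEVEL": "info", "DB_HOST": "db.internal"},
    name="shop-api-1-4-2",
)
print(shlex.join(release))
# docker run --detach --name shop-api-1-4-2 --env DB_HOST=db.internal --env LOG_LEVEL=info registry.example.com/shop-api:1.4.2
```

To actually start the container, the list could be passed to `subprocess.run(release, check=True)`. Rolling out version 1.4.3 would mean starting a new container from the new tag and stopping the old one, which is the immutable-infrastructure pattern described above.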

With containers, someone can go from having nothing on their machine to a full dev environment, with the ability to commit and deploy, in thirty minutes. Containers are the fastest means of launching short-lived, purpose-built testing sandboxes as part of a continuous integration process.

Containers are the result of a build pipeline (artifacts-to-workload). Test tools and test artifacts should be kept in separate test containers, so that each application container includes only the minimal runtime requirements of the application. An application bundled into a container with its dependencies is independent of the host’s Linux distribution and deployment model. It still runs on the host’s Linux kernel, however, so it is not entirely independent of the kernel version.

Benefits of Containers

Benefits of containers include the following:

The small size of a container allows for quick deployment.

Containers are portable across machines.

Containers are easy to track, and their versions are easy to compare.

More containers than virtual machines can run simultaneously on host machines.

The challenge with containers is getting your application stack working with Docker. Containerizing a specific application stack is a project easily justified by the return on investment (ROI) of reduced infrastructure costs and by the benefits of immutable infrastructure, such as a fast mean time to restore service (MTTRS) during failure events.

Containerized infrastructure environments sit between the host server (whether it’s virtual or bare metal) and the application. This offers advantages compared to legacy or traditional infrastructure. Containerized applications start faster because you do not have to boot an entire server.

Containerized application deployments are also “denser,” because containers don’t require you to virtualize a complete operating system. Containerized applications are also more scalable, because new containers are easy to spin up.
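The density argument is simple arithmetic: each VM pays for a full guest operating system, while each container pays only a small runtime overhead. The Python sketch below models memory only, and every number in it is a hypothetical placeholder, not a measurement.

```python
def max_instances(host_mem_mb: int, app_mem_mb: int, overhead_mb: int) -> int:
    """Copies of an app that fit in host memory (memory is the only limit modeled)."""
    return host_mem_mb // (app_mem_mb + overhead_mb)


HOST_MEM_MB = 65_536        # hypothetical 64 GiB host
APP_MEM_MB = 512            # hypothetical memory used by the application itself
VM_OVERHEAD_MB = 1_536      # hypothetical guest OS overhead per VM
CONTAINER_OVERHEAD_MB = 64  # hypothetical runtime overhead per container

vms = max_instances(HOST_MEM_MB, APP_MEM_MB, VM_OVERHEAD_MB)
containers = max_instances(HOST_MEM_MB, APP_MEM_MB, CONTAINER_OVERHEAD_MB)
print(vms, containers)  # 32 113
```

A real capacity estimate would also account for CPU, I/O and memory reserved for the host itself, but the per-instance operating system overhead is what drives the difference.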

Recommended Container Engineering Practices

Here are some recommended engineering practices for containerized infrastructure:

Decouple containers from infrastructure.

Have container deployments declare the resources they need (storage, compute, memory, network).

Place specialized hardware containers in their own cluster.

Use smaller clusters to reduce complexity between teams.
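One common way for a deployment to declare its resources is a Kubernetes manifest. The Python sketch below builds a Pod manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts. The field names (`resources.requests`, `resources.limits`, `ephemeral-storage`, `ports.containerPort`) are standard Kubernetes fields; the pod name, image and quantities are hypothetical, and setting requests equal to limits is just a simplification for this sketch.

```python
import json
from typing import Any, Dict


def pod_manifest(name: str, image: str, cpu: str, memory: str,
                 storage: str, port: int) -> Dict[str, Any]:
    """Build a Pod manifest that declares compute, memory, storage and network needs."""
    declared = {"cpu": cpu, "memory": memory, "ephemeral-storage": storage}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],  # network: the port the app listens on
        "resources": {
            "requests": dict(declared),      # what the scheduler reserves
            "limits": dict(declared),        # the ceiling the container may use
        },
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"containers": [container]},
    }


manifest = pod_manifest("shop-api", "registry.example.com/shop-api:1.4.2",
                        cpu="500m", memory="512Mi", storage="1Gi", port=8080)
print(json.dumps(manifest, indent=2))
```

Because the requirements travel with the deployment rather than with a particular server, the scheduler can place the container on any node that has the capacity, which is what decoupling containers from infrastructure means in practice.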

Serverless Computing

Serverless computing is a cloud computing execution model in which the cloud provider runs the server and dynamically manages the allocation of machine resources. Pricing is based on the actual resources consumed by an application, rather than on pre-purchased units of capacity. Serverless computing can simplify the process of deploying code into production. Scaling, capacity planning and maintenance operations may be hidden from the developer or operator. Serverless code can be used in conjunction with code deployed in traditional styles, such as microservices. Alternatively, applications can be written to be purely serverless and use no provisioned servers at all.
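The pricing difference can be illustrated with a worked calculation. The Python sketch below assumes a common pay-per-use shape (memory multiplied by execution time, in GB-seconds, plus a per-request fee) and compares it with a server billed for every provisioned hour. All prices and workload figures are hypothetical placeholders, not any provider’s actual rates.

```python
def serverless_cost(invocations: int, avg_duration_s: float, memory_gb: float,
                    price_per_gb_s: float, price_per_request: float) -> float:
    """Pay-per-use: billed on GB-seconds actually consumed plus a per-request fee."""
    gb_seconds = invocations * avg_duration_s * memory_gb
    return gb_seconds * price_per_gb_s + invocations * price_per_request


def provisioned_cost(servers: int, price_per_server_hour: float, hours: float) -> float:
    """Pre-purchased capacity: billed for every provisioned hour, busy or idle."""
    return servers * price_per_server_hour * hours


# Hypothetical month: 1 million requests of 0.2 s each at 0.5 GB of memory.
pay_per_use = serverless_cost(1_000_000, 0.2, 0.5,
                              price_per_gb_s=0.00002, price_per_request=0.0000002)
always_on = provisioned_cost(servers=1, price_per_server_hour=0.05, hours=730)
print(f"serverless:  ${pay_per_use:.2f}")   # serverless:  $2.20
print(f"provisioned: ${always_on:.2f}")     # provisioned: $36.50
```

For a light, bursty workload like this one, paying only for consumed resources wins easily; with sustained high traffic, the consumption-based bill grows with every request while the provisioned cost stays flat, so the comparison can flip.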

What This Means

This blog explained how containers compare to bare metal, virtual machines and serverless solutions. Those who are serious about DevOps would do well to learn about the advantages that containers offer as part of a DevOps solution. To learn more about containers, refer to my book Engineering DevOps.
